Not sure how to write a prompt? Take a look at this!

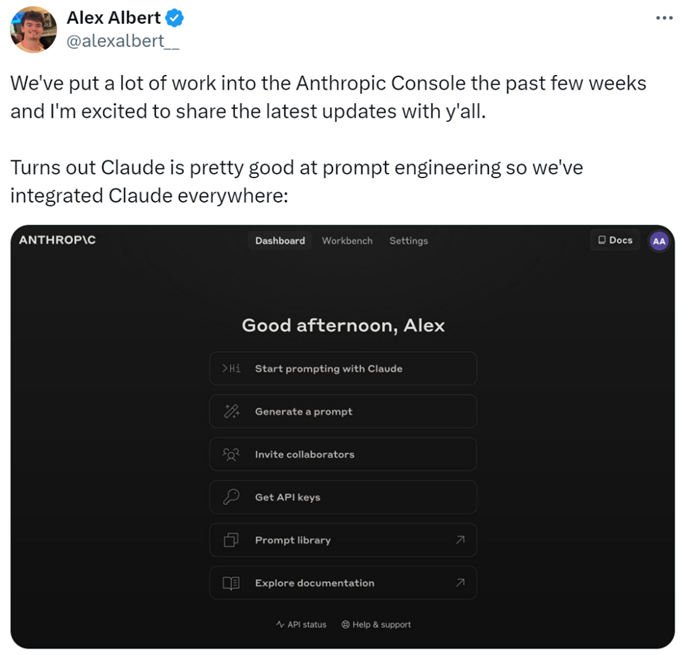

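In AI application development, prompt quality has a large effect on results. Writing a high-quality prompt is hard, however: researchers need a deep understanding of the application's needs as well as expertise in large language models. To speed up development and improve results, the AI startup Anthropic has simplified this process so that users can create high-quality prompts more easily.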

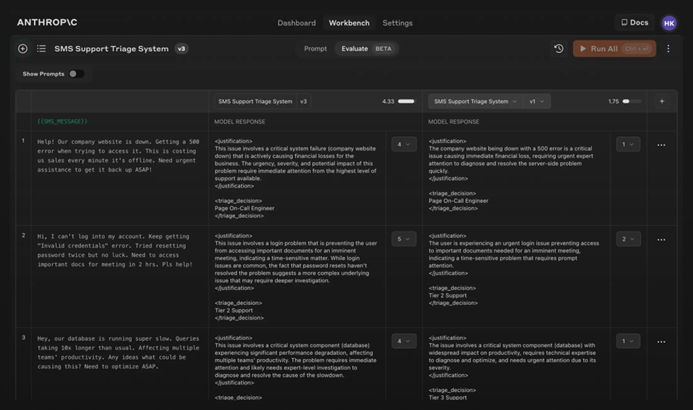

Specifically, the team has added new features to the Anthropic Console that let users generate, test, and evaluate prompts.

Alex Albert, a prompt engineer at Anthropic, said: "This is a major result of the past few weeks of work, and Claude is now excellent at prompt engineering."

Struggling with a prompt? Leave it to Claude

With Claude, writing a good prompt is as simple as describing the task. The Console includes a built-in prompt generator powered by Claude 3.5 Sonnet: the user describes a task, and Claude generates a high-quality prompt.

Generating a prompt: First, click "Generate Prompt" to open the prompt generation interface.

Next, enter a task description, and Claude 3.5 Sonnet turns it into a high-quality prompt. For example, enter "Write a prompt for reviewing incoming messages..." and click "Generate Prompt".

Generating test data: Once a user has a prompt, they may need test cases to run it against. Claude can generate those test cases.

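Users can edit the test cases as needed and run all of them with a single click. They can also review and adjust Claude's understanding of the requirements for each variable, which gives finer control over how Claude generates test cases.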
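To make the relationship between a prompt, its variables, and its test cases concrete, here is a minimal Python sketch. It does not call the Console or any Anthropic API. The `{{name}}` placeholder style, the template text, and the helper names `template_variables` and `render` are all assumptions made for illustration.

```python
import re

# Hypothetical prompt template; the {{name}} placeholder style is an assumption.
TEMPLATE = (
    "Review the following message and classify its tone.\n\n"
    "Message: {{message}}\n"
    "Audience: {{audience}}"
)

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def template_variables(template: str) -> list[str]:
    """Return placeholder names in order of first appearance."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def render(template: str, values: dict[str, str]) -> str:
    """Fill every placeholder; raise if the test case lacks a variable."""
    missing = [v for v in template_variables(template) if v not in values]
    if missing:
        raise KeyError(f"missing variables: {missing}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


# Hypothetical test cases: one value per template variable.
test_cases = [
    {"message": "Your order has shipped.", "audience": "customer"},
    {"message": "Reply now or lose access!", "audience": "employee"},
]

# "Running all test cases" here just means rendering one prompt per case.
rendered = [render(TEMPLATE, case) for case in test_cases]
```

Each entry in `rendered` is a complete prompt that could then be sent to a model. Raising on a missing variable is a deliberate choice in this sketch, so that an incomplete test case is caught before it reaches the model.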

These features make prompts easier to optimize: users can create a new version of a prompt and rerun the test suite to improve results quickly.

In addition, Anthropic has set up a five-point scale for grading the quality of Claude's responses.

Evaluating the model: When a user is satisfied with a prompt, they can run it against a variety of test cases in the "Evaluate" tab. Test data can be imported from a CSV file or generated synthetically with Claude.
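As a rough illustration of what CSV test data might look like, the sketch below parses a small CSV with Python's standard `csv` module. The layout (one column per prompt variable, one row per test case), the column names, and the `load_test_cases` helper are assumptions; the article does not specify the exact format the Console expects.

```python
import csv
import io

# Hypothetical CSV: one column per prompt variable, one row per test case.
CSV_TEXT = """message,audience
Your order has shipped.,customer
"Reply now, or lose access!",employee
"""


def load_test_cases(csv_text: str, required: set[str]) -> list[dict[str, str]]:
    """Parse CSV text into test cases, checking that required columns exist."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = set(reader.fieldnames or [])
    missing = required - header
    if missing:
        raise ValueError(f"CSV is missing columns: {sorted(missing)}")
    return [dict(row) for row in reader]


cases = load_test_cases(CSV_TEXT, {"message", "audience"})
```

Note that the second row quotes its first field because the text contains a comma; `csv.DictReader` handles that quoting, which a plain `split(",")` would not.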

Comparing: Users can compare multiple prompts against the test cases, score the better responses, and track which prompt performs best.
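One simple way to track which prompt performs best is to average the five-point grades per prompt version. The sketch below assumes grades are integers from 1 to 5 and uses invented sample numbers; it is not how the Console computes or stores scores, and the `average_grades` helper is hypothetical.

```python
from statistics import mean

# Hypothetical grades on a 1-5 scale, one per test case, for two prompt versions.
grades = {
    "prompt_v1": [3, 4, 2, 4],
    "prompt_v2": [4, 5, 4, 3],
}


def average_grades(grades: dict[str, list[int]]) -> dict[str, float]:
    """Validate that every grade is on the 1-5 scale, then average per prompt."""
    for name, scores in grades.items():
        if not scores:
            raise ValueError(f"{name} has no grades")
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"{name} has a grade outside 1-5")
    return {name: mean(scores) for name, scores in grades.items()}


averages = average_grades(grades)
# The prompt version with the highest average grade.
best = max(averages, key=averages.get)
```

A plain mean hides per-case regressions, so in practice it can help to also look at the individual test cases where a newer prompt version scored lower than the previous one.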

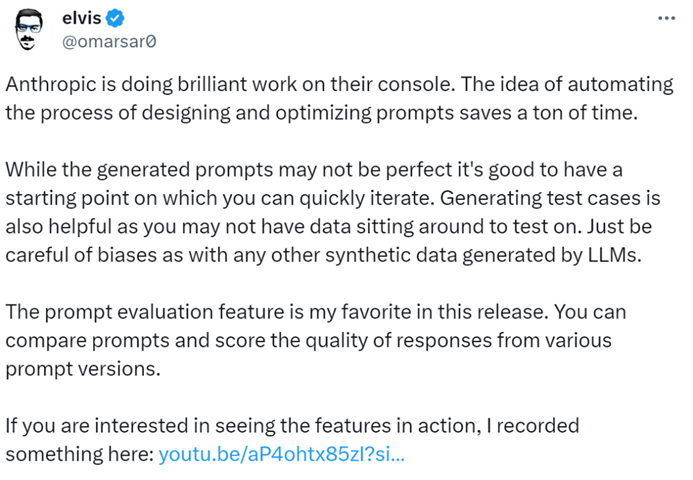

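AI blogger @elvis said: "The Anthropic Console is an excellent tool, and its automated prompt design and optimization process saves a lot of time. The generated prompts may not be perfect, but they provide a starting point for rapid iteration. The test case generation feature is also very handy, since developers often have no data available for testing."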

Going forward, it looks like prompt writing can be handed off to Anthropic.

For details, see the documentation: https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/overview
