Control Robots Remotely with Apple Vision Pro. NVIDIA: 'Human-Machine Integration Isn't Hard'

Jensen Huang said: "The next wave of AI is robotics, and one of the most exciting developments is humanoid robots." Today, Project GR00T has taken an important step forward.

Yesterday, NVIDIA founder Jensen Huang discussed Project GR00T, the company's general-purpose humanoid robot foundation model, during his keynote at SIGGRAPH 2024. The model has received a series of functional updates.

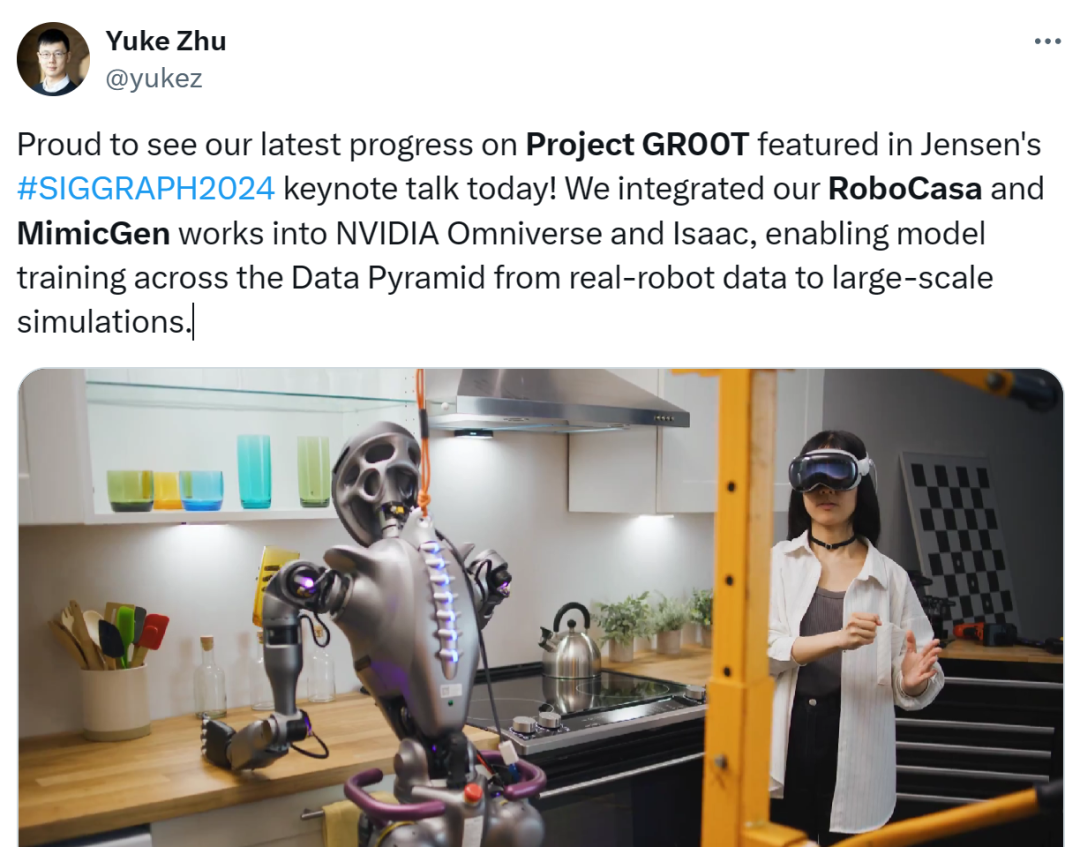

Yuke Zhu, an assistant professor at the University of Texas at Austin and a senior research scientist at NVIDIA, tweeted a video showing how NVIDIA integrated RoboCasa and MimicGen, large-scale simulation training frameworks for household robots, into the NVIDIA Omniverse platform and the Isaac robot development platform.

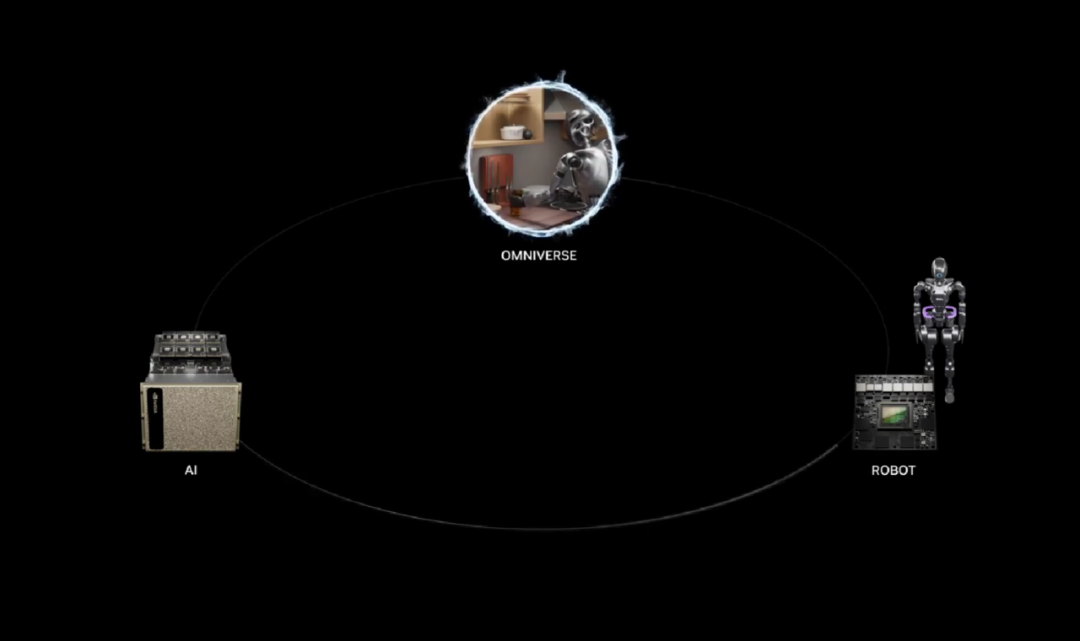

The video covers NVIDIA's three computing platforms (AI, Omniverse, and Jetson Thor) and shows how they are used to simplify and accelerate developer workflows. With the combined capabilities of these platforms, we are about to enter an era of humanoid robots powered by physical AI.

One highlight is that developers can use Apple Vision Pro to teleoperate humanoid robots and have them perform tasks.

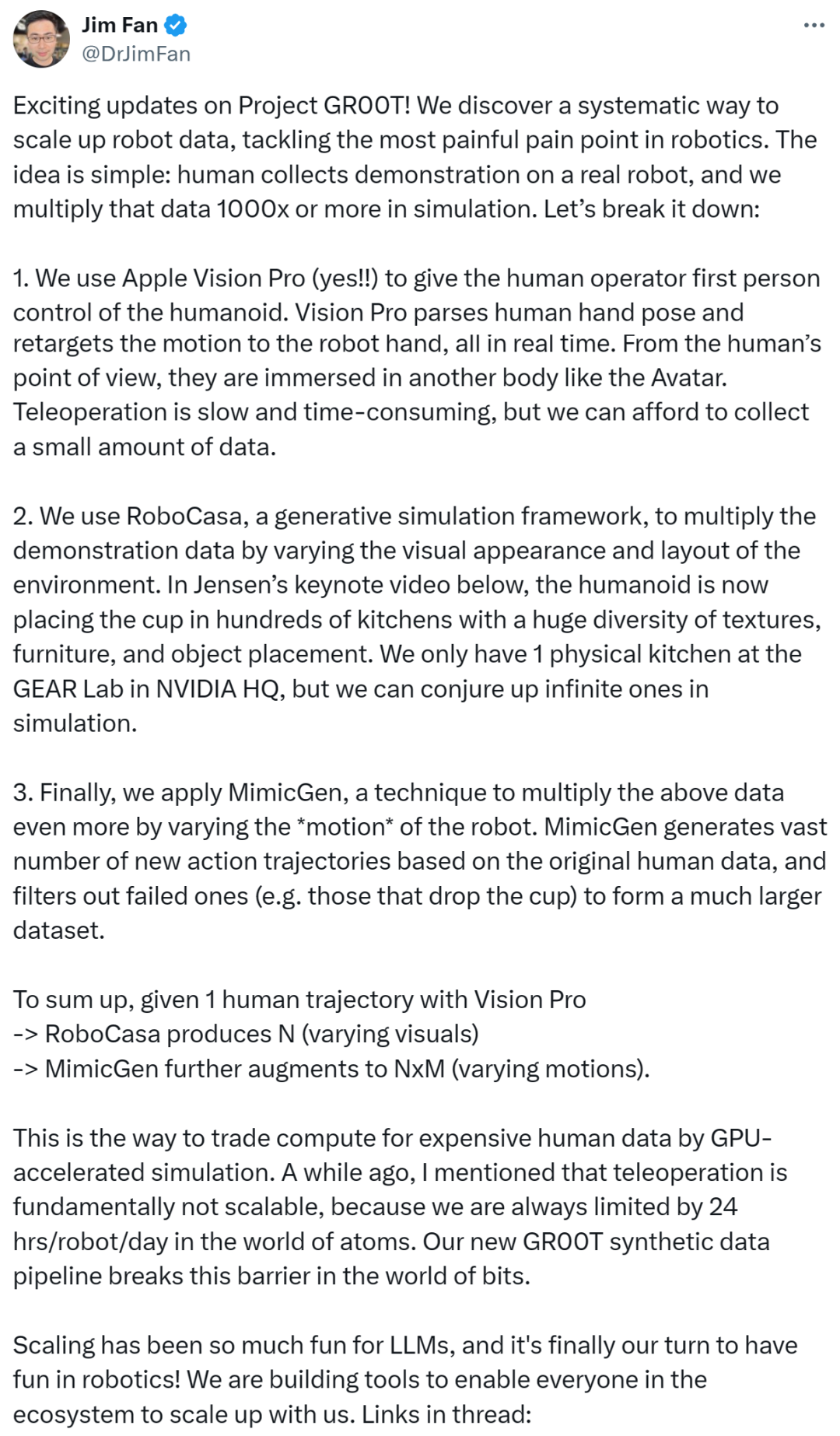

Meanwhile, Jim Fan, another senior research scientist at NVIDIA, called the Project GR00T updates exciting. NVIDIA is taking a systematic approach to scaling robot data, tackling some of the hardest problems in robotics.

The idea is simple: humans collect demonstration data on real robots, and NVIDIA scales that data by 1,000x or more in simulation. With GPU-accelerated simulation, people can now use compute to replace the time-consuming, labor-intensive, and expensive process of human data collection.
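To make the "scale a few demonstrations by 1,000x" idea concrete, here is a toy sketch in Python. It is not MimicGen or any NVIDIA API: the (x, y) waypoint format, `augment_demo`, `scale_demos`, and the random-offset scheme are all hypothetical stand-ins for re-targeting a recorded motion to new simulated object positions.

```python
import random
from typing import List, Tuple

# Hypothetical data format: a demo is a list of (x, y) end-effector waypoints.
Waypoint = Tuple[float, float]
Demo = List[Waypoint]


def augment_demo(demo: Demo, max_shift: float, rng: random.Random) -> Demo:
    """Return a copy of `demo` translated by one random offset.

    The shared offset stands in (very loosely) for re-targeting the motion
    to an object placed at a new position in simulation.
    """
    dx = rng.uniform(-max_shift, max_shift)
    dy = rng.uniform(-max_shift, max_shift)
    return [(x + dx, y + dy) for x, y in demo]


def scale_demos(demos: List[Demo], factor: int,
                max_shift: float = 0.05, seed: int = 0) -> List[Demo]:
    """Expand each human demonstration into `factor` synthetic variants."""
    rng = random.Random(seed)  # seeded so the synthetic set is reproducible
    synthetic: List[Demo] = []
    for demo in demos:
        for _ in range(factor):
            synthetic.append(augment_demo(demo, max_shift, rng))
    return synthetic


if __name__ == "__main__":
    human_demos = [
        [(0.0, 0.0), (0.1, 0.2), (0.3, 0.4)],
        [(0.0, 0.1), (0.2, 0.2), (0.4, 0.3)],
    ]
    synthetic = scale_demos(human_demos, factor=1000)
    print(len(human_demos), "human demos ->", len(synthetic), "synthetic demos")
```

A real pipeline would also check each generated trajectory in simulation and discard failures; this sketch only shows the multiplication of data, not its validation.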

He noted that not long ago he believed teleoperation was fundamentally unscalable because, in the world of atoms, we are always capped at 24 hours per robot per day. The new synthetic data pipeline used in GR00T breaks this limit in the digital world.
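The 24-hour ceiling is easy to see with back-of-the-envelope arithmetic. The sketch below compares an upper bound on teleoperation hours with simulated experience from parallel environments; the robot count, GPU count, environments per GPU, and real-time factor are made-up illustrative numbers, not figures from NVIDIA.

```python
HOURS_PER_DAY = 24  # the hard ceiling for any physical robot


def teleop_hours(num_robots: int, days: int) -> int:
    """Upper bound on real-world teleoperation data: every robot, every hour, every day."""
    return num_robots * HOURS_PER_DAY * days


def simulated_hours(num_gpus: int, envs_per_gpu: int,
                    realtime_factor: float, days: int) -> float:
    """Simulated experience when many environments run in parallel, each
    `realtime_factor` times faster than wall-clock time (all inputs hypothetical)."""
    return num_gpus * envs_per_gpu * realtime_factor * HOURS_PER_DAY * days


if __name__ == "__main__":
    real = teleop_hours(num_robots=10, days=30)
    sim = simulated_hours(num_gpus=8, envs_per_gpu=64, realtime_factor=2.0, days=30)
    print(f"teleop ceiling: {real} h, simulated: {sim:.0f} h, ratio: {sim / real:.0f}x")
```

Actual throughput depends heavily on the simulator and the task; the point is only that the simulated total grows with compute, while the teleoperation total is capped at robot count times 24 hours per day.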

Commenting on NVIDIA's latest advances in humanoid robotics, one netizen remarked that Apple Vision Pro has finally found its coolest use case.

NVIDIA Begins to Lead the Next Wave: Physical AI

NVIDIA also detailed the technical process behind accelerating humanoid robot development in a blog post. Here is the full text:

To accelerate humanoid robot development worldwide, NVIDIA announced a suite of services, models, and computing platforms for the world's leading robot manufacturers, AI model developers, and software makers, enabling them to develop, train, and build the next generation of humanoid robots.

The suite includes new NVIDIA NIM microservices and frameworks for robot simulation and learning, the NVIDIA OSMO orchestration service for running multi-stage robotics workloads, and an AI- and simulation-enabled teleoperation workflow that lets developers train robots with minimal human demonstration data.

Jensen Huang said: "The next wave of AI is robotics, and one of the most exciting developments is humanoid robots. We're advancing the entire NVIDIA robotics stack, opening it up to humanoid developers and companies worldwide so they can use the platforms, acceleration libraries, and AI models best suited to their needs."

Accelerating Development with NVIDIA NIM and OSMO

NIM microservices provide prebuilt containers powered by NVIDIA inference software, cutting deployment times from weeks to minutes.

Two new AI microservices will let roboticists enhance simulation workflows for generative physical AI in NVIDIA Isaac Sim.

The MimicGen NIM microservice generates synthetic motion data from teleoperation data recorded with spatial computing devices such as Apple Vision Pro. The Robocasa NIM microservice generates robot tasks and simulation environments in OpenUSD.

NVIDIA OSMO, a managed cloud service, is now available. It lets users orchestrate and scale complex robot development workflows across distributed computing resources, whether on premises or in the cloud. OSMO greatly simplifies robot training and simulation workflows, cutting deployment and development cycles from months to less than a week.

Providing Advanced Data Capture Workflows for Humanoid Robot Developers

Training the foundation models behind humanoid robots requires vast amounts of data. One way to obtain human demonstration data is teleoperation, but this method is becoming increasingly expensive and time-consuming.

With the NVIDIA AI- and Omniverse-enabled teleoperation reference workflow, demonstrated at the SIGGRAPH computer graphics conference, researchers and AI developers can generate large amounts of synthetic motion and perception data from a minimal number of remotely captured human demonstrations.

First, developers use Apple Vision Pro to capture a small number of teleoperated demonstrations. Then they simulate the recordings in NVIDIA Isaac Sim and use the MimicGen NIM microservice to generate synthetic datasets from them.

Developers train the Project GR00T humanoid robot foundation model on both real and synthetic data, saving significant time and cost. They then use the Robocasa NIM microservice in Isaac Lab, a framework for robot learning, to generate experiences for retraining the robot model. Throughout the workflow, NVIDIA OSMO seamlessly assigns compute jobs to different resources, saving developers weeks of management overhead.
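The multi-stage workflow described above can be pictured as a dependency graph whose stages run on different kinds of compute. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to order the stages; it does not use or model OSMO's actual interface, and the stage names, resource labels, and `schedule` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical stages mirroring the workflow in the text: each entry lists the
# stages it depends on and the kind of compute it would be assigned to.
PIPELINE = {
    "capture_teleop": {"deps": [], "resource": "workstation"},  # Apple Vision Pro capture
    "replay_in_sim": {"deps": ["capture_teleop"], "resource": "sim_gpu"},
    "generate_synthetic": {"deps": ["replay_in_sim"], "resource": "sim_gpu"},
    "train_model": {"deps": ["capture_teleop", "generate_synthetic"],
                    "resource": "training_cluster"},  # real + synthetic data
    "generate_experiences": {"deps": ["train_model"], "resource": "sim_gpu"},
    "retrain_model": {"deps": ["train_model", "generate_experiences"],
                      "resource": "training_cluster"},
}


def schedule(pipeline):
    """Return (stage, resource) pairs in an order that respects dependencies."""
    graph = {name: set(spec["deps"]) for name, spec in pipeline.items()}
    order = TopologicalSorter(graph).static_order()  # raises CycleError on cycles
    return [(name, pipeline[name]["resource"]) for name in order]


if __name__ == "__main__":
    for stage, resource in schedule(PIPELINE):
        print(f"{stage:<22} -> {resource}")
```

A real orchestrator would also run independent stages concurrently and retry failures; a topological order is just the minimum needed to respect dependencies between stages.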

Expanding Access to NVIDIA Humanoid Robot Development Technologies

NVIDIA offers three computing platforms to simplify humanoid robot development: NVIDIA AI supercomputers for training models; NVIDIA Isaac Sim, built on Omniverse, where robots learn and refine skills in a simulated world; and the NVIDIA Jetson Thor humanoid robot computer for running models. Developers can access and use all or part of these platforms according to their specific needs.

Through the new NVIDIA Humanoid Robot Developer Program, developers can get early access to new products and the latest releases of NVIDIA Isaac Sim, NVIDIA Isaac Lab, Jetson Thor, and the Project GR00T humanoid robot foundation model.

1x, Boston Dynamics, ByteDance, Field AI, Figure, Fourier, Galbot, LimX Dynamics, Mentee, Neura Robotics, RobotEra, and Skild AI are among the first companies to join the early access program.

Developers can now join the NVIDIA Humanoid Robot Developer Program to access NVIDIA OSMO and Isaac Lab, and will soon gain access to NVIDIA NIM microservices.

Blog link: https://nvidianews.nvidia.com/news/nvidia-accelerates-worldwide-humanoid-robotics-development
