Don't Know How to Write Prompts? Look Here!

In AI application development, prompt quality has a significant effect on results. Writing high-quality prompts is hard, though: it takes a deep understanding of the application's needs as well as expertise with large language models. To speed up development and improve results, the AI startup Anthropic has simplified this process, making it easier for users to create high-quality prompts.

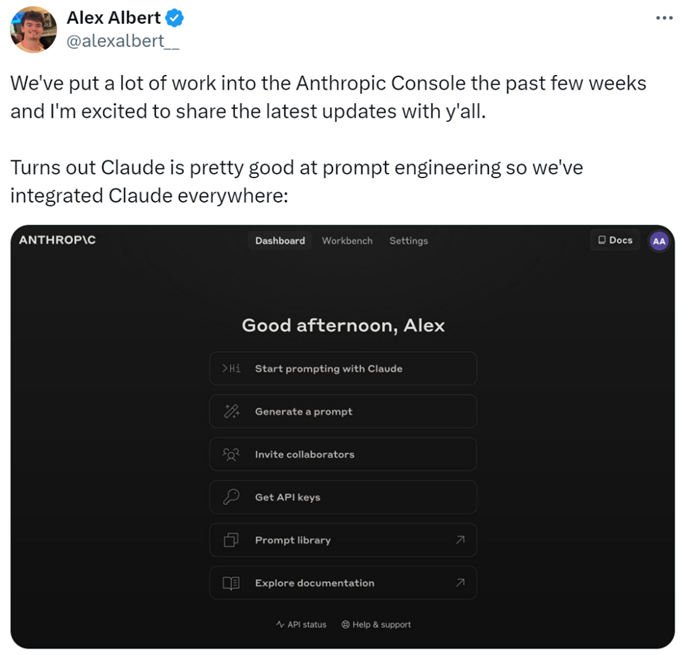

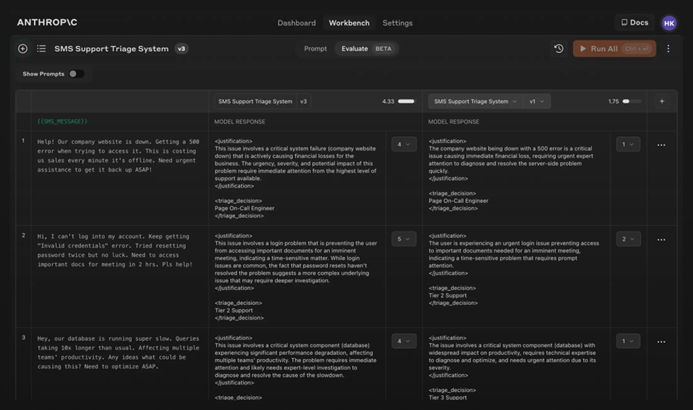

Specifically, Anthropic has added new features to the Anthropic Console for generating, testing, and evaluating prompts.

Anthropic prompt engineer Alex Albert said: "This is the result of significant work over the past few weeks, and Claude now excels at prompt engineering."

Difficult Prompts? Leave Them to Claude

With Claude, writing a good prompt is as simple as describing the task. The Console includes a built-in prompt generator powered by Claude 3.5 Sonnet: users describe a task, and Claude generates a high-quality prompt for it.

Prompt generation: First, click "Generate Prompt" to open the prompt generation interface:

Then enter a task description, and Claude 3.5 Sonnet will turn it into a high-quality prompt. For example, enter "Write a prompt for reviewing incoming messages...", then click "Generate Prompt."

Test data generation: Once users have a prompt, they may need some test cases to run it against. Claude can generate those test cases.

Users can edit the test cases as needed and run all of them with a single click. They can also view and adjust Claude's understanding of the requirements for each variable, which gives finer control over the test cases Claude generates.

These features make prompts easier to optimize: users can create a new version of a prompt and rerun the test suite to iterate quickly and improve results.

In addition, Anthropic has set up a five-point scale for rating the quality of Claude's responses.

Model evaluation: Once users are satisfied with a prompt, they can run it against a range of test cases in the "Evaluate" tab. Users can import test data from CSV files or have Claude generate synthetic test data.
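As a rough illustration of what running one prompt against CSV test cases involves, the following sketch fills a prompt template from CSV rows. The `{{variable}}` placeholder style, the column names, the sample data, and the `render_prompt` and `load_test_cases` helpers are all assumptions made for this example; it does not show how the Console works internally.

```python
import csv
import io
import re

# Hypothetical prompt template; the {{name}} placeholder style is an assumption.
TEMPLATE = (
    "Review the following message and classify its tone.\n\n"
    "Message: {{message}}\n"
    "Language: {{language}}"
)

# Hypothetical test data, inlined so the example is self-contained.
CSV_TEXT = """message,language
Where is my order?,English
Thanks for the quick reply!,English
"""


def render_prompt(template: str, variables: dict) -> str:
    """Replace each {{name}} placeholder with the matching value."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing variable: {name}")
        return variables[name]

    return re.sub(r"\{\{(\w+)\}\}", substitute, template)


def load_test_cases(csv_text: str) -> list[dict]:
    """Parse CSV text into one dict per test case, keyed by column name."""
    return list(csv.DictReader(io.StringIO(csv_text)))


test_cases = load_test_cases(CSV_TEXT)
prompts = [render_prompt(TEMPLATE, case) for case in test_cases]

for prompt in prompts:
    print(prompt)
    print("---")
```

Raising an error on a missing variable, rather than leaving the placeholder in place, is one possible design choice: it surfaces a mismatch between the template and the CSV columns before any test case is run.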

Comparison: Users can test multiple prompts against each other on the same test cases and rate the responses to track which prompt performs best.
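The idea of comparing prompt versions by their ratings can be sketched in a few lines. The example below assumes each response has been graded by hand on a 1 to 5 scale and simply averages the grades per prompt version; the version names, the grades, and the `summarize` helper are hypothetical, and averaging is only one possible way to aggregate ratings.

```python
from statistics import mean

# Hypothetical grades on a 1-5 scale, one per test case, for two prompt versions.
grades = {
    "prompt_v1": [3, 4, 2, 4],
    "prompt_v2": [4, 5, 4, 4],
}


def summarize(grades: dict[str, list[int]]) -> dict[str, float]:
    """Return the average grade per prompt version, validating the 1-5 range."""
    for name, scores in grades.items():
        if not scores:
            raise ValueError(f"{name} has no grades")
        if any(not 1 <= score <= 5 for score in scores):
            raise ValueError(f"{name} has a grade outside the 1-5 scale")
    return {name: mean(scores) for name, scores in grades.items()}


averages = summarize(grades)
best = max(averages, key=averages.get)

for name, average in averages.items():
    print(f"{name}: {average:.2f}")
print(f"best: {best}")
```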

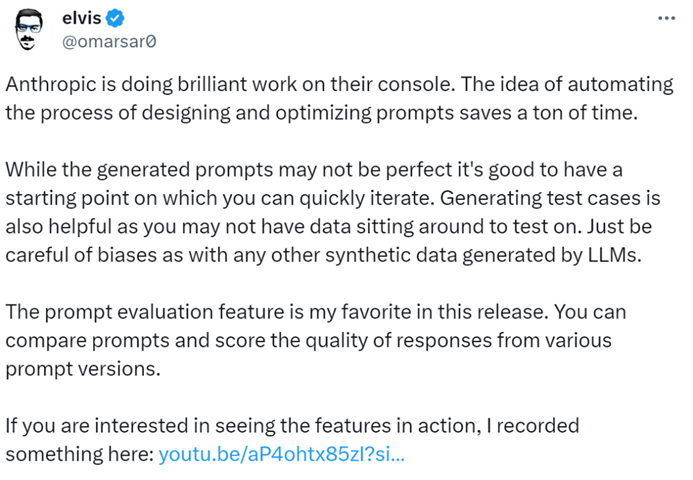

AI blogger @elvis said: "The Anthropic Console is an exceptional tool that saves a lot of time with its automated prompt design and optimization process. The generated prompts may not be perfect, but they provide a starting point for rapid iteration. The test case generation feature is also very useful, since developers may not have data available for testing."

It seems that, in the future, prompt writing may be something we hand over to Anthropic.

For more information, see the documentation: https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/overview.
